everyweeks

The challenge was to create a picture, sound or video every week. I write the software behind the algorithmic images in C++. Unless stated otherwise, all videos can run live as an application.

I started this challenge in November 2015 but stopped in June 2016, since the Everyweeks had started to take up most of my free time and I didn't want to neglect my real work. Instead, I will release sketches without a fixed schedule at https://vimeo.com/album/2961417.


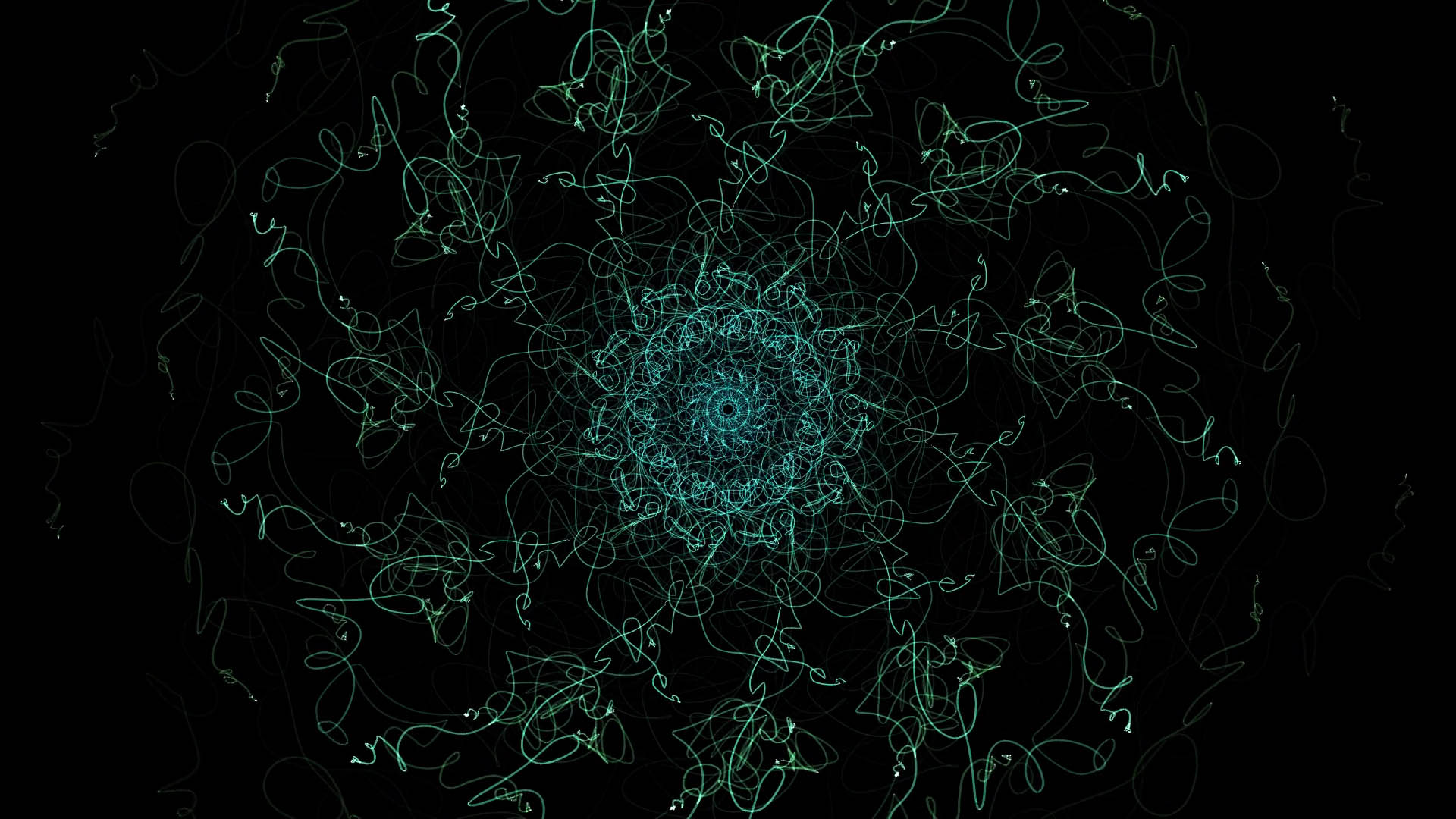

The Pond

a circular pattern of ropes

18/06/2016

Every rope creates a triangular sound wave. The ropes are pitched in pairs to the harmonics of a base sound, including one negative harmonic. Pitch and volume modulation of these monophonic sound waves are controlled by the physical attributes of the head of each rope. I used splines to smooth the simulation.
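
A minimal C++ sketch of one rope voice, assuming a phase-accumulator oscillator: a triangle wave whose frequency and gain are fed in per sample. The mapping from the rope head to pitch (`ropeFrequency`, `headOffset`, `depth`) is hypothetical, and the spline smoothing is not shown.

```cpp
#include <cassert>
#include <cmath>

// Triangle wave from a normalized phase in [0, 1): rises from -1 to +1, then falls back.
double triangle(double phase) {
    assert(phase >= 0.0 && phase < 1.0);
    return phase < 0.5 ? 4.0 * phase - 1.0 : 3.0 - 4.0 * phase;
}

// One monophonic rope voice. 'frequency' and 'gain' are recomputed every sample from
// the rope head's state; how that state maps to pitch and volume is left open here.
struct RopeVoice {
    double phase = 0.0;

    double next(double frequency, double gain, double sampleRate) {
        const double out = gain * triangle(phase);
        phase += frequency / sampleRate;
        phase -= std::floor(phase); // wrap back into [0, 1)
        return out;
    }
};

// Hypothetical mapping: the head's offset bends the pitch of harmonic n of the base
// frequency by up to +/- 'depth' (as a fraction of that harmonic's frequency).
double ropeFrequency(double baseHz, int harmonic, double headOffset, double depth) {
    return baseHz * harmonic * (1.0 + depth * std::tanh(headOffset));
}
```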

Rose

feedback

10/06/2016

for Insa

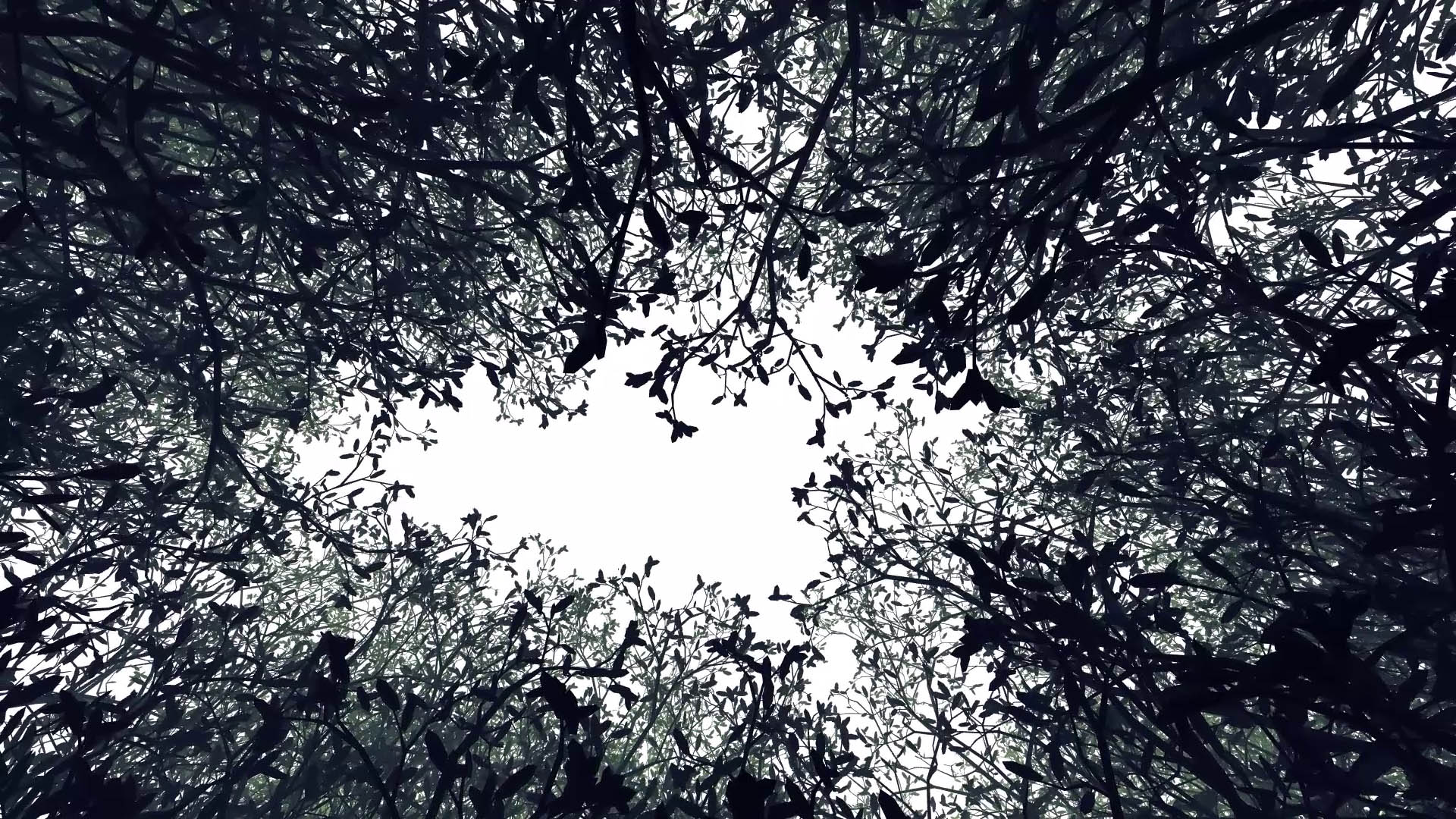

Wilderness

05/06/2016

Lying on the ground, looking up into the sky.

Bokeh (Study 1)

29/05/2016

This is a first study for simulating Bokeh (the way a lens renders out-of-focus points of light).

I used the OpenGL shading language to scatter point brightnesses based on a pinhole camera image. The depth of every pixel within the pinhole camera image determines how big the so-called “circle of confusion” of that pixel will be.

This approach is very slow and does not run at interactive rates, since shading languages are much better suited to gathering (each pixel reads from its neighbors) than to scattering (each pixel writes to its neighbors). For the next approach, I will use OpenCL or a comparable language that allows scattering operations.
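
To illustrate the two steps, here is a CPU sketch in C++ for a grayscale image. It assumes the standard thin-lens formula for the circle-of-confusion diameter and assumes the per-pixel radius has already been converted to pixels (that scale factor is not part of the text). It is not the shader code used for the video; it only shows why scattering writes to many output pixels per input pixel.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Thin-lens circle-of-confusion diameter (same length unit as the inputs).
// aperture: aperture diameter, focal: focal length,
// focusDist: distance in focus, depth: distance of the point being rendered.
double circleOfConfusion(double aperture, double focal, double focusDist, double depth) {
    assert(depth > 0.0 && focusDist > focal);
    return aperture * std::fabs(depth - focusDist) / depth * focal / (focusDist - focal);
}

// CPU scatter: each source pixel spreads its brightness evenly over a disk whose
// radius (in pixels) comes from its own CoC. Writing into other pixels is what
// makes this a scatter rather than a gather.
std::vector<float> scatterBokeh(const std::vector<float>& brightness,
                                const std::vector<float>& cocRadiusPx,
                                int width, int height) {
    assert(brightness.size() == static_cast<std::size_t>(width) * height);
    assert(cocRadiusPx.size() == brightness.size());
    std::vector<float> out(brightness.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float r = cocRadiusPx[y * width + x];
            const int ir = static_cast<int>(std::ceil(r));
            // First pass: count disk pixels so that total brightness is preserved.
            int count = 0;
            for (int dy = -ir; dy <= ir; ++dy)
                for (int dx = -ir; dx <= ir; ++dx)
                    if (dx * dx + dy * dy <= r * r) ++count;
            const float share = brightness[y * width + x] / count;
            // Second pass: splat the share into every covered pixel inside the image.
            for (int dy = -ir; dy <= ir; ++dy)
                for (int dx = -ir; dx <= ir; ++dx) {
                    const int tx = x + dx, ty = y + dy;
                    if (dx * dx + dy * dy <= r * r && tx >= 0 && tx < width && ty >= 0 && ty < height)
                        out[ty * width + tx] += share;
                }
        }
    }
    return out;
}
```

On a GPU, the inner splat loop is exactly the part a fragment shader cannot express directly, since a fragment may only write to its own pixel.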

Heavy Rain

22/05/2016

This isn't a recording from our backyard. These are 256 small, very fast-swinging ropes that sound almost like heavy rain hitting a roof.

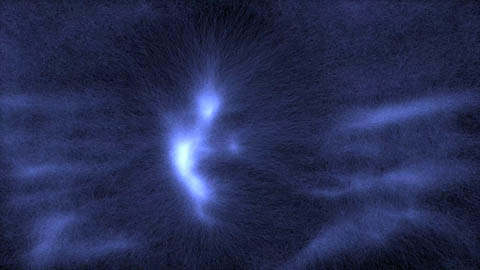

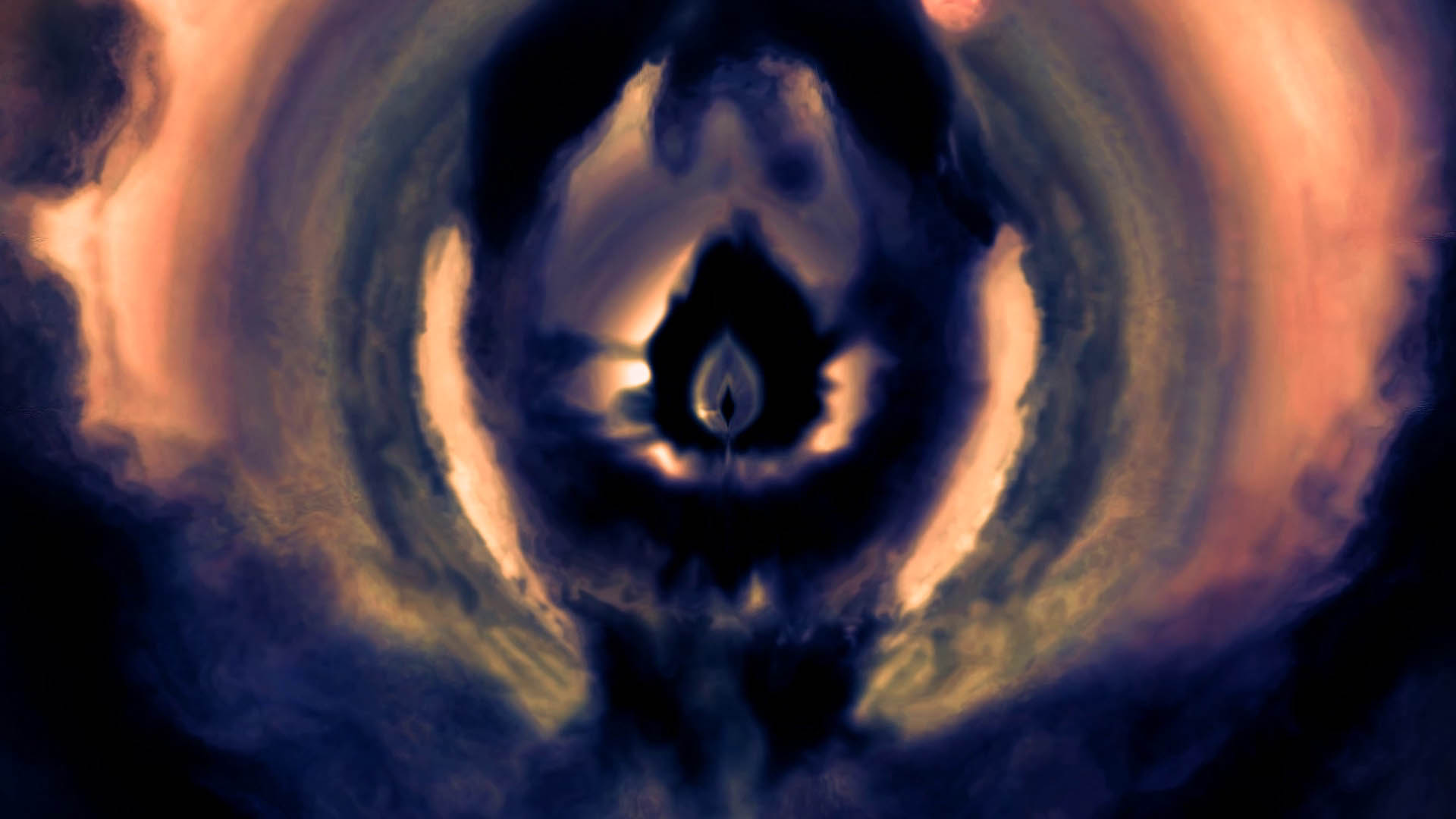

Nebula

A feedback nebula

15/05/2016

This image harks back to the tunnel-like analog feedback loops you can create by pointing a video camera at a monitor that displays the camera's image, just slightly zoomed in. The possible complexity of the resulting images has always fascinated me.

The problem with this kind of feedback is that the screen often goes either black or white after a while. You can keep it alive by blending in a kind of image seed, as I do here with a candle-like function, but there is more to be done.

I started out with the idea of a “stroboscopic” feedback loop to balance dark and bright areas. In every digital feedback step, the image gets zoomed and inverted. To create the final video, I only show you every second image so you do not see any flicker.

In addition, I distort the position from which I read back the image, based on the image's color content, something that would be very difficult to achieve in the analog world. Finally, I sharpen and colorize the image using the XYZ color space.
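
A simplified CPU sketch of the stroboscopic loop in C++. It assumes a grayscale image, nearest-neighbor lookups and a hypothetical brightness-driven offset for the read position. Sharpening and XYZ colorization are left out, and the parameter values in `runStroboscopic` are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image {
    int w, h;
    std::vector<float> px; // grayscale in [0, 1]
    float at(float x, float y) const { // nearest-neighbor lookup, clamped to the border
        const int ix = std::clamp(static_cast<int>(std::lround(x)), 0, w - 1);
        const int iy = std::clamp(static_cast<int>(std::lround(y)), 0, h - 1);
        return px[iy * w + ix];
    }
};

// One digital feedback step: zoom in, displace the read position by the image content,
// invert, and blend in a seed image to keep the loop alive.
Image feedbackStep(const Image& prev, const Image& seed, float zoom, float warp, float seedMix) {
    assert(prev.w == seed.w && prev.h == seed.h && zoom > 0.0f);
    Image next{prev.w, prev.h, std::vector<float>(prev.px.size())};
    const float cx = 0.5f * (prev.w - 1), cy = 0.5f * (prev.h - 1);
    for (int y = 0; y < prev.h; ++y)
        for (int x = 0; x < prev.w; ++x) {
            // Reading closer to the center magnifies the previous frame (zoom > 1).
            float sx = cx + (x - cx) / zoom, sy = cy + (y - cy) / zoom;
            // Content-dependent distortion: brightness shifts the lookup position.
            const float v = prev.at(sx, sy);
            sx += warp * (v - 0.5f);
            sy -= warp * (v - 0.5f);
            const float inverted = 1.0f - prev.at(sx, sy);
            next.px[y * prev.w + x] = (1.0f - seedMix) * inverted + seedMix * seed.px[y * prev.w + x];
        }
    return next;
}

// Runs the loop and keeps only every second frame: shown frames have been inverted
// an even number of times, so the light/dark flicker cancels out.
std::vector<Image> runStroboscopic(Image img, const Image& seed, int steps) {
    std::vector<Image> shown;
    for (int i = 1; i <= steps; ++i) {
        img = feedbackStep(img, seed, 1.02f, 4.0f, 0.05f);
        if (i % 2 == 0) shown.push_back(img);
    }
    return shown;
}
```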

Enjoy the flight...

Edit: I included a small colorization upgrade three hours after release.

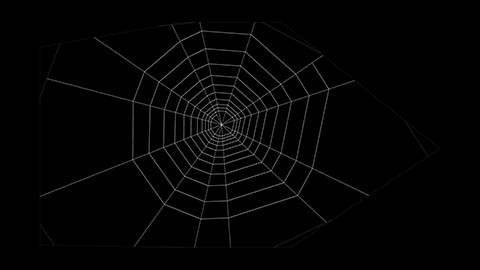

Spider Web

Artificial spider webs suited for physical modeling

09/05/2016

...then it appeared to me: spiders are aerial fishermen...

I mostly followed the approach by Schmitt, Walter and Comba, but differ in that my web is suitable for physical modeling of wind and similar forces. This means I create a web of interconnected nodes in which, for example, the sticky threads really are connected to the radial threads. In the video, I added gravity and a little movement in the center of the net to show this.

The net is constructed as follows: starting from a center point, I shoot threads into the environment to form a basic frame of anchor points. Then I randomly create some support threads. Next, I shoot radial threads from the center and finally run in spirals to create the sticky spiral threads. There is, as always, a lot more left to be done. As in other approaches, and due to time limitations, I did not implement the travel threads that run between the sticky ones.
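
The following C++ sketch shows only the indexing idea behind such a web, under simplifying assumptions: radial threads ending in fixed anchors and an Archimedean spiral whose crossings are shared nodes on the radials. Frame, support and travel threads are omitted, the construction order differs from the one described above, and all names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Node { double x, y; bool fixed; };
struct Thread { int a, b; bool sticky; };
struct Web { std::vector<Node> nodes; std::vector<Thread> threads; };

// Every spiral crossing is a node on its radial, so forces propagate between
// sticky and radial threads when the web is simulated.
Web buildWeb(int radials, int turns, double radius) {
    assert(radials >= 3 && turns >= 1 && radius > 0.0);
    const double kPi = std::acos(-1.0);
    Web web;
    web.nodes.push_back({0.0, 0.0, false});      // center node, index 0
    const int steps = radials * turns;           // number of spiral crossings
    std::vector<int> lastOnRadial(radials, 0);   // outermost node so far on each radial
    int previousSpiral = -1;
    for (int i = 0; i < steps; ++i) {
        const int r = i % radials;
        const double angle = 2.0 * kPi * r / radials;
        // Archimedean spiral: distance grows linearly with the crossing index.
        const double dist = radius * (i + 1) / (steps + 1);
        web.nodes.push_back({dist * std::cos(angle), dist * std::sin(angle), false});
        const int id = static_cast<int>(web.nodes.size()) - 1;
        web.threads.push_back({lastOnRadial[r], id, false});                       // radial segment
        if (previousSpiral >= 0) web.threads.push_back({previousSpiral, id, true}); // sticky segment
        lastOnRadial[r] = id;
        previousSpiral = id;
    }
    // Close every radial with a fixed anchor on the outer frame.
    for (int r = 0; r < radials; ++r) {
        const double angle = 2.0 * kPi * r / radials;
        web.nodes.push_back({radius * std::cos(angle), radius * std::sin(angle), true});
        web.threads.push_back({lastOnRadial[r], static_cast<int>(web.nodes.size()) - 1, false});
    }
    return web;
}
```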

This Everyweek was delayed since I had a bad cold last week. The net itself is a case of node/thread indexing paradise/nightmare, so it took some time to get it all right.

Thanks to Chris Wening for the inspiration.

Crickets

Synthesized sound of crickets at dusk

01/05/2016

I synthesized the sound of these crickets based on a cricket we recorded after sunset last year near the city I live in.

For the sound basics, I use four harmonics that I additionally pitch-bend upwards at the beginning of every chirp. The envelope for every chirp is a squared sine wave. The crickets chirp two to five times in rapid succession, then take a short – and sometimes much longer – break. Finally, I use a low-pass filter on far away crickets to simulate atmospheric effects and create more depth.
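
A C++ sketch of a single chirp along these lines. The amount and duration of the upward bend (`bendStart`, `bendFraction`) and the 1/n harmonic amplitudes are assumptions, not the values used for the recording.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Renders one chirp: four harmonics of 'baseHz', pitch-bent upwards from 'bendStart'
// times their final pitch during the first 'bendFraction' of the chirp, shaped by a
// squared-sine envelope.
std::vector<float> renderChirp(double baseHz, double durationSec, double sampleRate,
                               double bendStart = 0.9, double bendFraction = 0.3) {
    assert(baseHz > 0.0 && durationSec > 0.0 && sampleRate > 0.0 && bendFraction > 0.0);
    const double kTwoPi = 2.0 * std::acos(-1.0);
    const int n = static_cast<int>(durationSec * sampleRate);
    std::vector<float> out(n);
    double phase[4] = {0.0, 0.0, 0.0, 0.0};
    for (int i = 0; i < n; ++i) {
        const double t = static_cast<double>(i) / n; // position within the chirp, 0..1
        // Pitch factor rises linearly from bendStart to 1, then holds.
        const double bend = t < bendFraction ? bendStart + (1.0 - bendStart) * t / bendFraction : 1.0;
        const double s = std::sin(0.5 * kTwoPi * t);
        const double env = s * s; // squared sine envelope: silent at both ends
        double sum = 0.0;
        for (int h = 0; h < 4; ++h) {
            sum += std::sin(phase[h]) / (h + 1);
            phase[h] = std::fmod(phase[h] + kTwoPi * baseHz * (h + 1) * bend / sampleRate, kTwoPi);
        }
        out[i] = static_cast<float>(env * sum / (25.0 / 12.0)); // 1 + 1/2 + 1/3 + 1/4 = 25/12
    }
    return out;
}
```

A cricket would then repeat such chirps two to five times with short gaps, and distant crickets would additionally pass through a low-pass filter.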

Special thanks to Lorenz Schwarz for the recording and taking a close listen.

Birds

24/04/2016

I often work very late at night and sometimes into the morning of the following day. On these occasions, I do enjoy the early morning singing of the birds. So why not give it a try and synthesize that moment?

The sound of the syrinx (the bird's vocal organ) has been modeled by scientists to a very convincing degree. I mainly used the model described by Rodrigo Laje et al. in 2002 for all eleven simulated birds. You can find it at lsd.df.uba.ar.

Every bird has a specific distance from and placement around the listener. Reverberation is calculated per bird and increases in proportion to its distance. I then use simple volume panning to establish a bird's direction. A final reverb merges everything together in a single artificial space.
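
A minimal C++ sketch of the per-bird placement, assuming linear volume panning and a reverb send proportional to distance. `sendPerMeter` and the azimuth-to-pan mapping are hypothetical tuning choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct BirdMix { double left, right, reverbSend; };

// pan: -1 (hard left) .. +1 (hard right). The reverb send grows proportionally with
// distance and is clamped so that it never exceeds 1.
BirdMix placeBird(double pan, double distanceMeters, double sendPerMeter = 0.02) {
    assert(pan >= -1.0 && pan <= 1.0 && distanceMeters >= 0.0);
    BirdMix m;
    m.left = 0.5 * (1.0 - pan);   // simple linear volume panning
    m.right = 0.5 * (1.0 + pan);
    m.reverbSend = std::min(1.0, sendPerMeter * distanceMeters);
    return m;
}

// Pan value from an azimuth in radians (0 = straight ahead, positive = to the right).
double panFromAzimuth(double azimuth) { return std::sin(azimuth); }
```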

The singing is random, but the birds' song became a lot more realistic once I stopped choosing the singing/breathing times and pitch interpolation times purely at random for every new cycle. Including previous values in the calculation allowed a more clustered arrangement of shorter and longer, lower and higher phrases.
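
One simple way to get this kind of clustering, sketched in C++ under the assumption of a first-order recursive blend: each new value mixes the previous value with a fresh random draw. `CorrelatedRandom` and its `memory` parameter are hypothetical and not necessarily what the birds use.

```cpp
#include <cassert>
#include <random>

// Draws phrase parameters (e.g. singing time, breathing time, pitch-glide time) that
// depend partly on the previous value instead of being independent each cycle.
// memory in [0, 1]: 0 = fully random, values near 1 = long clusters of similar phrases.
class CorrelatedRandom {
public:
    CorrelatedRandom(double lo, double hi, double memory, unsigned seed)
        : dist_(lo, hi), memory_(memory), rng_(seed), value_(0.5 * (lo + hi)) {
        assert(lo < hi && memory >= 0.0 && memory <= 1.0);
    }
    double next() {
        value_ = memory_ * value_ + (1.0 - memory_) * dist_(rng_);
        return value_;
    }
private:
    std::uniform_real_distribution<double> dist_;
    double memory_;
    std::mt19937 rng_;
    double value_;
};
```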

Bloom

A bloom experiment

15/04/2016

This Everyweek was inspired by the group “mercury”, who use bloom in some of their demoscene productions. A straightforward two-pass Gaussian blur proved too time-consuming for high-resolution images, so I use an alternative approach based on blurred mipmaps.

While the hand itself has a golden color, I calculate the bloom strength separately for the red, green and blue components, creating a more fiery orange color fringe. I added a very small amount of motion blur to the input images as well.
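
A C++ sketch of mipmap-based bloom for one color channel, assuming a bright-pass threshold, a 2x2 box filter per mip level, nearest-neighbor upsampling and equal weights per level. A GPU version would let the texture hardware do bilinear upsampling instead. Calling it with different `strength` values for red, green and blue tints the fringe as described above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Plane { int w, h; std::vector<float> v; };

// 2x2 box downsample: one mipmap level.
Plane downsample(const Plane& p) {
    Plane d{p.w / 2, p.h / 2, {}};
    d.v.resize(static_cast<std::size_t>(d.w) * d.h);
    for (int y = 0; y < d.h; ++y)
        for (int x = 0; x < d.w; ++x)
            d.v[y * d.w + x] = 0.25f * (p.v[(2 * y) * p.w + 2 * x] + p.v[(2 * y) * p.w + 2 * x + 1] +
                                        p.v[(2 * y + 1) * p.w + 2 * x] + p.v[(2 * y + 1) * p.w + 2 * x + 1]);
    return d;
}

// Bloom for one channel: keep what exceeds 'threshold', build a mip chain from it,
// and add every level back at full resolution.
Plane bloomChannel(const Plane& in, float threshold, float strength, int levels) {
    assert(in.w > 0 && in.h > 0 && levels >= 1);
    Plane bright = in;
    for (float& x : bright.v) x = x > threshold ? x - threshold : 0.0f;
    Plane out = in;
    Plane level = bright;
    for (int l = 0; l < levels && level.w >= 2 && level.h >= 2; ++l) {
        level = downsample(level);
        const int scale = 1 << (l + 1); // full-resolution pixels per level pixel
        for (int y = 0; y < in.h; ++y)
            for (int x = 0; x < in.w; ++x) {
                const int lx = std::min(x / scale, level.w - 1);
                const int ly = std::min(y / scale, level.h - 1);
                out.v[y * in.w + x] += strength / levels * level.v[ly * level.w + lx];
            }
    }
    return out;
}
```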

Chimes

Chimes created with additive synthesis

08/04/2016

For every chime, I use seven sine waves with random, inharmonic frequencies and random decay times. One of these partials has a frequency one octave below the base frequency. Each chime's sound is detuned according to its swing, and I used a short delay to create a phasing effect.
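
An additive-synthesis sketch in C++, assuming uniformly distributed frequency ratios, decay times and amplitudes (the ranges in `makeChime` are hypothetical) and a constant `detune` factor per note. A swing-driven detune that changes over time would need per-partial phase accumulation instead.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct Partial { double freq, decay, amp; };

// Seven partials: one an octave below the base, six at random inharmonic multiples.
std::vector<Partial> makeChime(double baseHz, unsigned seed) {
    assert(baseHz > 0.0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> ratio(1.0, 4.5), decay(0.5, 3.0), amp(0.3, 1.0);
    std::vector<Partial> p;
    p.push_back({baseHz * 0.5, decay(rng), amp(rng)}); // octave below the base frequency
    for (int i = 0; i < 6; ++i) p.push_back({baseHz * ratio(rng), decay(rng), amp(rng)});
    return p;
}

// Sum of exponentially decaying sines, plus a short delayed copy for phasing.
std::vector<float> renderChime(const std::vector<Partial>& partials, double detune,
                               double seconds, double sampleRate, double delaySec = 0.003) {
    const double kPi = std::acos(-1.0);
    const int n = static_cast<int>(seconds * sampleRate);
    std::vector<float> dry(n, 0.0f);
    for (const Partial& p : partials)
        for (int i = 0; i < n; ++i) {
            const double t = i / sampleRate;
            dry[i] += static_cast<float>(p.amp * std::exp(-t / p.decay) *
                                         std::sin(2.0 * kPi * p.freq * detune * t));
        }
    const int d = static_cast<int>(delaySec * sampleRate);
    std::vector<float> out(n);
    for (int i = 0; i < n; ++i) out[i] = 0.5f * (dry[i] + (i >= d ? dry[i - d] : 0.0f));
    return out;
}
```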

If you want to know how to create an even more bell-like sound, you may want to look up the “Synth Secrets” article at Sound on Sound: soundonsound.com/sos/Aug02/articles/synthsecrets0802.asp

Feedback Delay Network

Artificial reverberation using a Feedback Delay Network (FDN)

03/04/2016

This reverb has a much lower computational load than my previous implementations. Here, I use a version with 16 delay lines, very close to Jot's FDN design. More information on this model can be found at ccrma.stanford.edu/realsimple/Reverb/

A note: This FDN reverb does not include early reflections, i.e. the sound that the first few bounces off walls would generate. It simulates only late diffuse reverberation. Early reflections can be added to achieve a more realistic reverberation.
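
A compact C++ sketch of a 16-line FDN, assuming delay lengths of roughly 1000 to 2000 samples, a normalized Hadamard feedback matrix and mono input/output. The per-line gain follows the usual rule that every line should lose 60 dB over the decay time T60. Like the reverb described above, it produces no separate early reflections.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

class Fdn16 {
public:
    Fdn16(double sampleRate, double t60Seconds) {
        static const int kLengths[16] = {1031, 1103, 1171, 1237, 1303, 1361, 1423, 1493,
                                         1553, 1609, 1667, 1723, 1783, 1847, 1901, 1973};
        assert(sampleRate > 0.0 && t60Seconds > 0.0);
        for (int i = 0; i < 16; ++i) {
            lines_[i].assign(kLengths[i], 0.0f);
            // 60 dB of attenuation after t60 seconds: g = 10^(-3 m / (fs * T60)).
            gains_[i] = static_cast<float>(std::pow(10.0, -3.0 * kLengths[i] / (sampleRate * t60Seconds)));
        }
    }

    float process(float in) {
        std::array<float, 16> x;
        float out = 0.0f;
        for (int i = 0; i < 16; ++i) {
            x[i] = gains_[i] * lines_[i][pos_[i]]; // delayed, attenuated line output
            out += x[i];
        }
        hadamard16(x); // lossless mixing between all lines
        for (int i = 0; i < 16; ++i) {
            lines_[i][pos_[i]] = in + x[i]; // feed the mix (plus input) back in
            pos_[i] = (pos_[i] + 1) % lines_[i].size();
        }
        return out / 16.0f;
    }

private:
    // In-place fast Walsh-Hadamard transform, scaled by 1/4 so the matrix is orthogonal.
    static void hadamard16(std::array<float, 16>& v) {
        for (int len = 1; len < 16; len <<= 1)
            for (int i = 0; i < 16; i += 2 * len)
                for (int j = i; j < i + len; ++j) {
                    const float a = v[j], b = v[j + len];
                    v[j] = a + b;
                    v[j + len] = a - b;
                }
        for (float& s : v) s *= 0.25f;
    }

    std::array<std::vector<float>, 16> lines_;
    std::array<float, 16> gains_{};
    std::array<std::size_t, 16> pos_{};
};
```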

Thank you to Ida Nordpol for the voice.

Vocoder

27/03/2016

Vocoder effect using a Fourier transform approach. The text, about the “strong law of small numbers”, is modulated by a noisy part of the Caleidoscope audio track:

As Richard K. Guy observed in October 1988, "There aren't enough small numbers to meet the many demands made of them." In other words, any given small integer number appears in far more contexts than may seem reasonable, leading to many apparently surprising coincidences in mathematics, simply because small numbers appear so often and yet are so few.

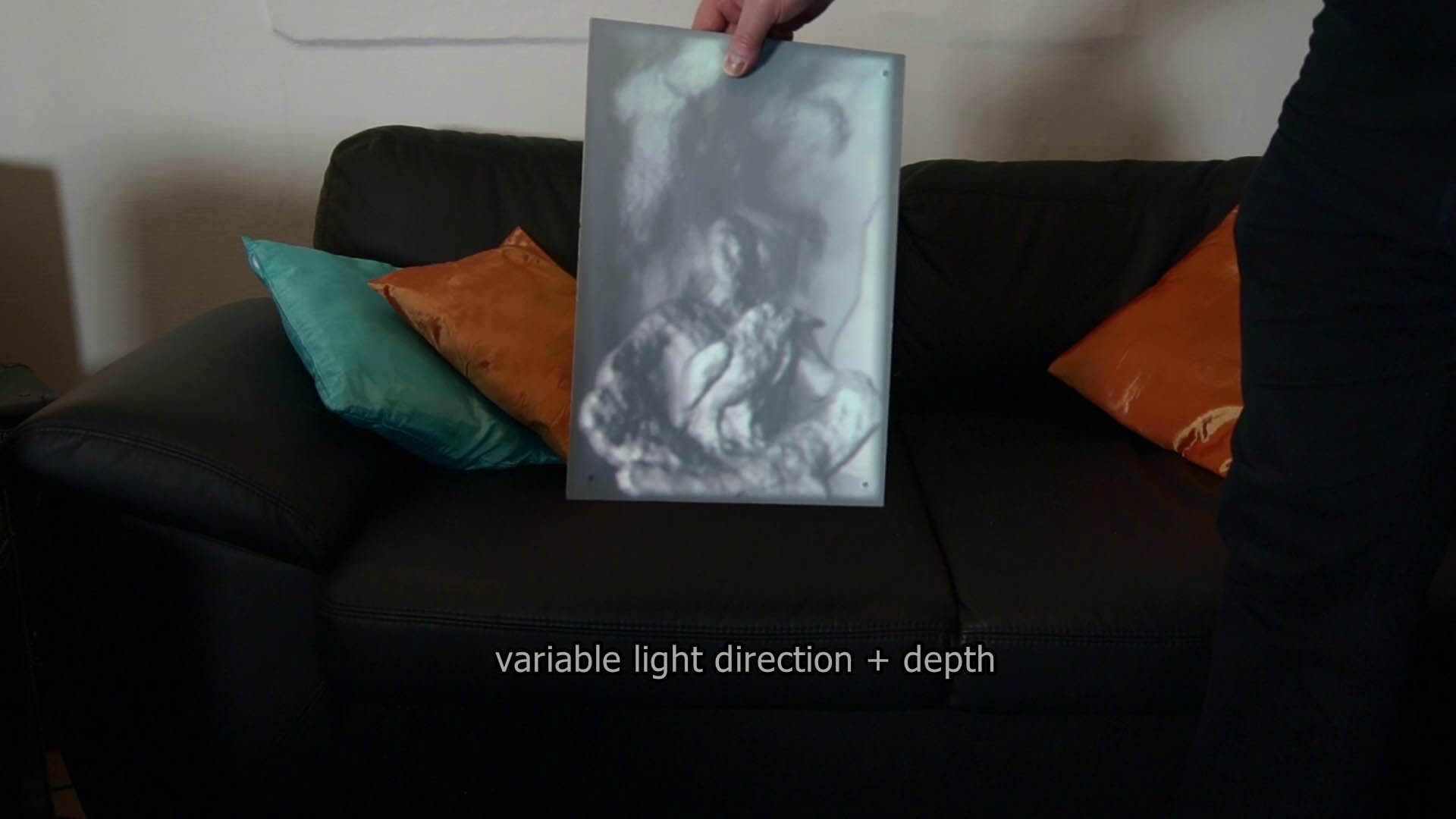

Projection Mapping

20/03/2016

A short demonstration of projection mapping on a moving surface. I show three successive steps:

- Mapping with fixed lighting – a precomputed texture is projected onto the surface

- Mapping with variable lighting – directions of light sources are taken into account live

- Mapping with variable lighting and depth – depth is simulated assuming a certain camera position

Position tracking is done using three small infrared LEDs on the board and some modified PlayStation 3 Eye cameras with infrared filters.

Special thanks to Lorenz Schwarz for his assistance in the production of this recording.

Spiral

13/03/2016

A very colorful spiral.

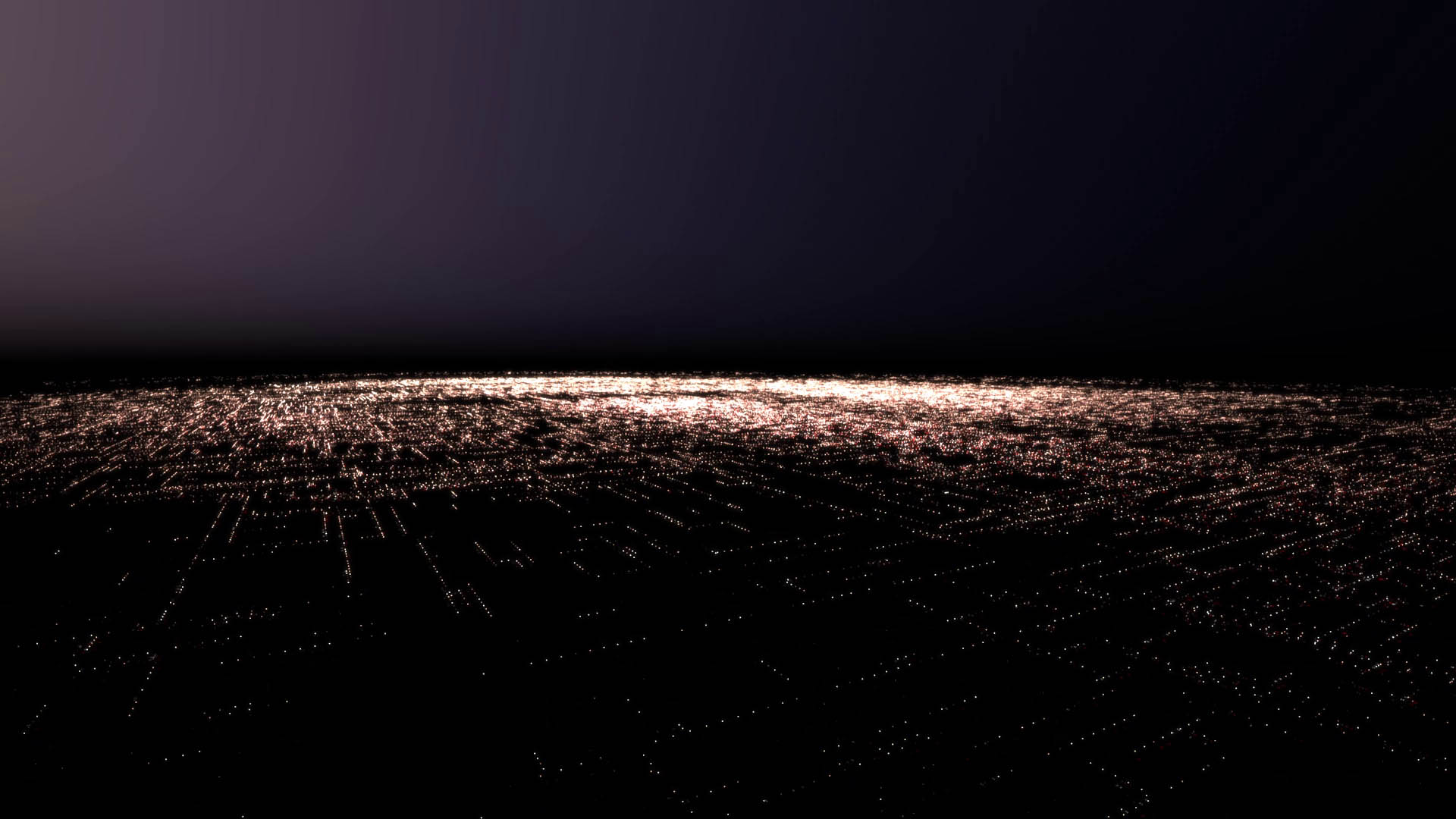

City Lights

06/03/2016

A flight across a desert city that turns out to be something else.

P.S. The final image was the only one that I could access quickly.

Harp

28/02/2016

An artificial pentatonic harp. The 19 individual strings of the harp are based on physical modeling (a mass-spring system). In this screen capture, I control them interactively by waving my hand in front of a camera. Low-pitched strings are positioned on the left, high-pitched strings on the right.

I used strings with the same tension but varying length (number of springs). The sound of each string is created by a single virtual pickup quite close to the attachment point of that string. The image you see is a filtered difference image (i.e. what changes from frame to frame) that forms the basis for tracking the hand.
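
A C++ sketch of one such string, assuming unit masses, a unit time step and a simple damping factor, with the pickup at the first moving mass. The pentatonic tuning in `pentatonicSpringCounts` is an assumption: with equal tension, pitch is roughly inversely proportional to length, so the spring count is scaled by the inverse frequency ratio of a just-intoned major pentatonic scale.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// A chain of point masses joined by identical springs; both end masses stay fixed.
class SpringString {
public:
    SpringString(int springs, double stiffness, double damping)
        : pos_(springs + 1, 0.0), vel_(springs + 1, 0.0), k_(stiffness), damping_(damping) {
        // stiffness < 1 keeps this explicit update stable with a unit time step.
        assert(springs >= 2 && stiffness > 0.0 && stiffness < 1.0);
    }
    // Displace one moving mass, e.g. where the tracked hand crossed the string.
    void pluck(int node, double amount) {
        assert(node > 0 && node < static_cast<int>(pos_.size()) - 1);
        pos_[node] += amount;
    }
    // Advance one audio sample (semi-implicit Euler) and return the displacement under
    // a single pickup next to the first attachment point.
    double step() {
        const int n = static_cast<int>(pos_.size());
        for (int i = 1; i < n - 1; ++i) {
            const double force = k_ * (pos_[i - 1] - 2.0 * pos_[i] + pos_[i + 1]);
            vel_[i] = (vel_[i] + force) * (1.0 - damping_);
        }
        for (int i = 1; i < n - 1; ++i) pos_[i] += vel_[i];
        return pos_[1];
    }
private:
    std::vector<double> pos_, vel_;
    double k_, damping_;
};

// Spring counts for successive strings: N = N_lowest / (frequency ratio).
std::vector<int> pentatonicSpringCounts(int lowestSprings, int strings) {
    static const double ratios[5] = {1.0, 9.0 / 8.0, 5.0 / 4.0, 3.0 / 2.0, 5.0 / 3.0};
    std::vector<int> counts;
    for (int s = 0; s < strings; ++s) {
        const double ratio = ratios[s % 5] * std::pow(2.0, s / 5); // s / 5 = octave index
        counts.push_back(static_cast<int>(std::lround(lowestSprings / ratio)));
    }
    return counts;
}
```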

Strange River

21/02/2016

Just playing around with halos. The original footage is from the river Neckar.

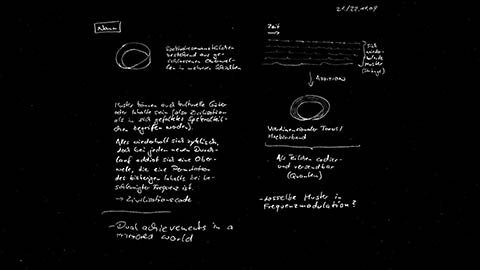

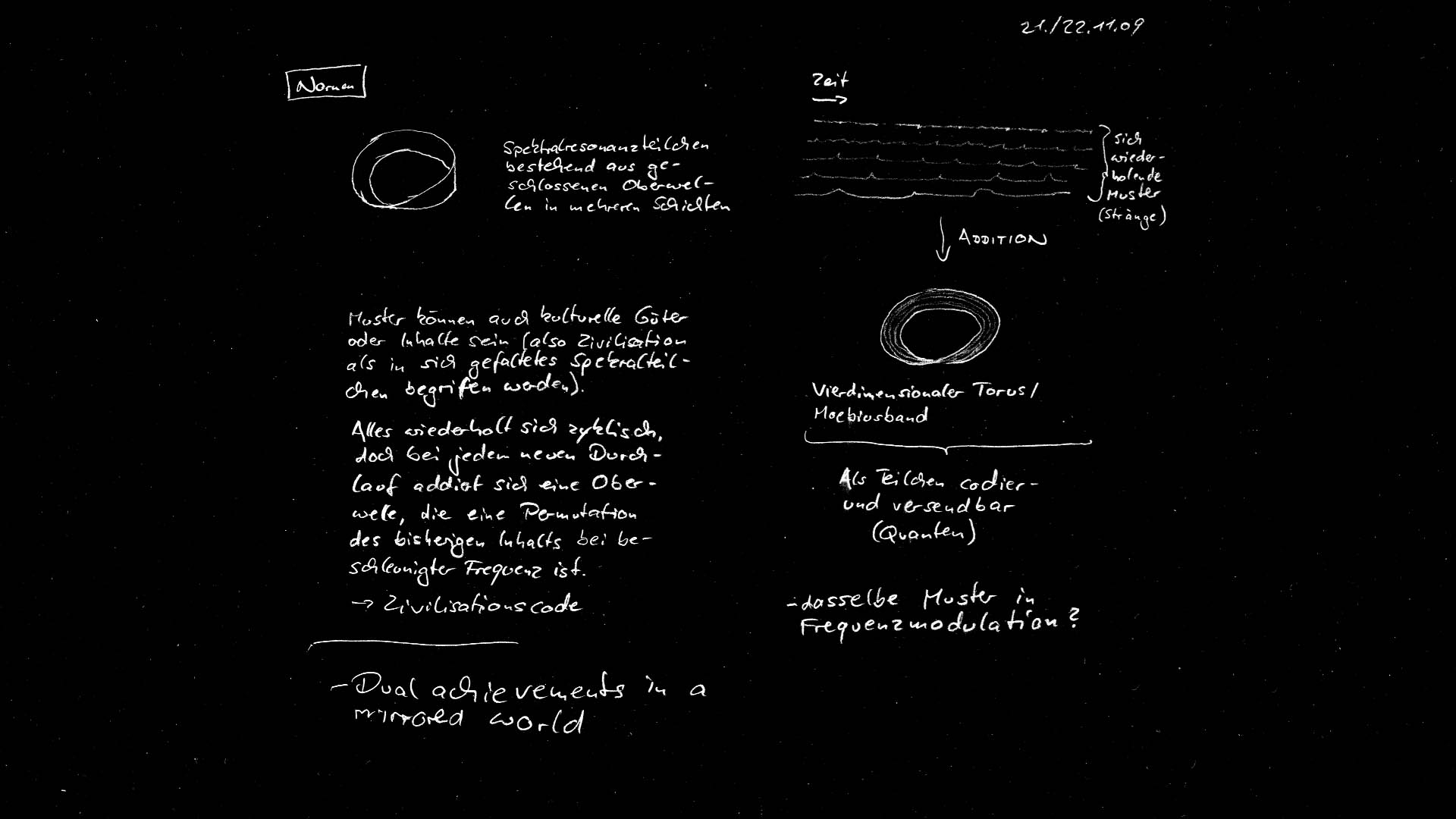

Norns (Notes)

14/02/2016

A note on the Norns, from quite a while ago... If our reality were woven from threads, what could they possibly look like? Here I assume that reality is enclosed in a single container of some sort, and that it is encoded as what I call a spectral resonance particle. The following is a direct translation of the text from my note page:

Norns: Spectral resonance particle consisting of closed harmonics in multiple layers

Patterns may also be cultural assets or content (so civilization can be understood as a spectral particle, folded into itself). Everything repeats cyclically, but with every cycle a harmonic is added that is a permutation of previous content at an increased frequency.

→ civilisation code

Time → Repeating patterns (strings) → Addition → Four dimensional torus / Moebius strip → Can be encoded and sent as particle (quanta)

- the same pattern in frequency modulation?

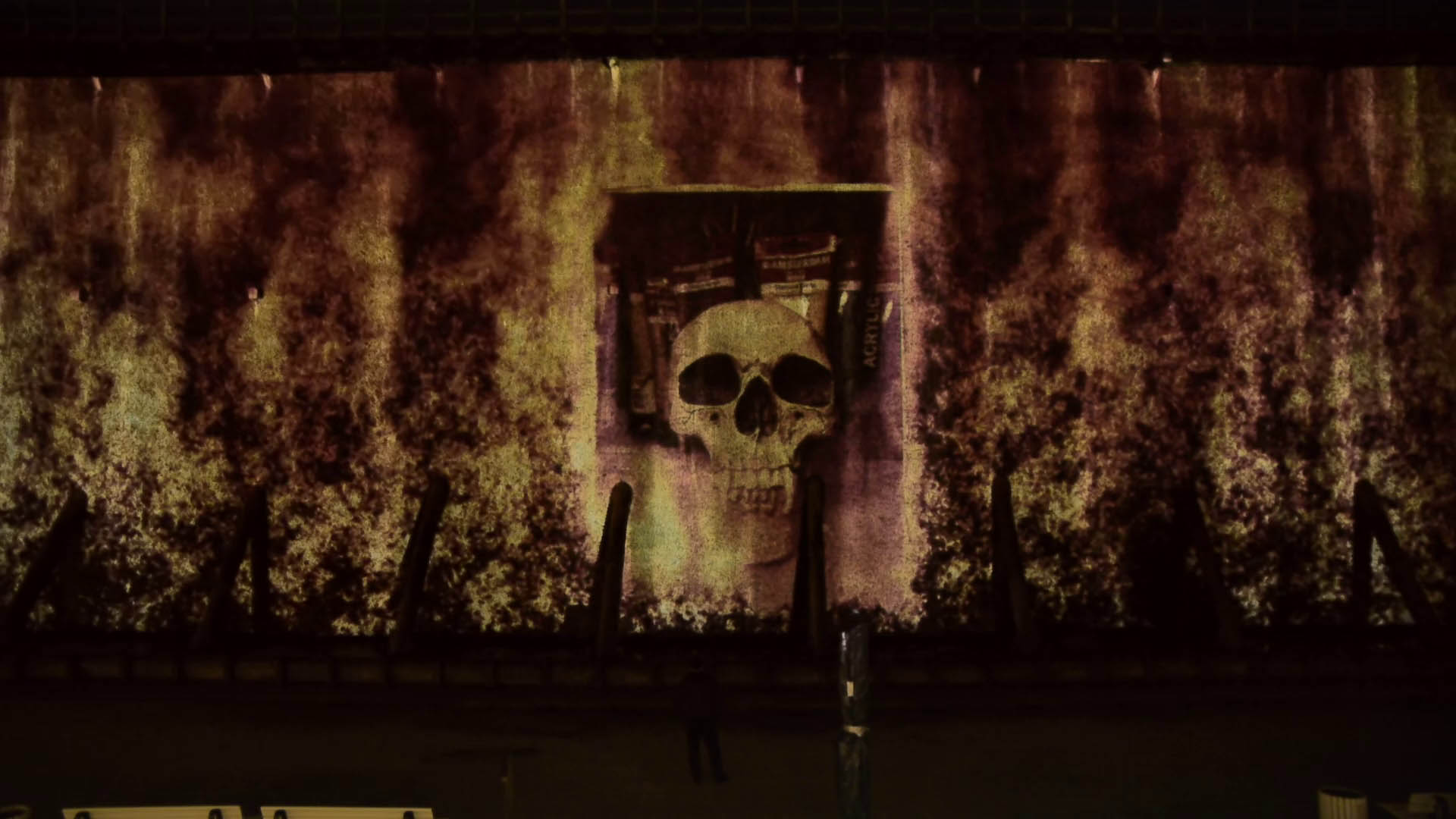

Feuerwall (Sneak Peek)

07/02/2016

This is a sneak peek at the documentation of my installation "Feuerwall". The installation was part of the fifth lichtsicht projection biennial in Bad Rothenfelde, which ended today.

Camera by Marc Teuscher, skull photo by Ida Nordpol

You can find more information on the installation at foerterer.com/feuerwall and lichtsicht-biennale.de

Norns

31/01/2016

According to Germanic mythology, three Norns twine the thread of fate: Wyrd (that which has come to pass), Verðandi (what is in the process of happening) and Skuld (debt, guilt). With this idea in mind, I explored what it would look like if images were woven from threads.

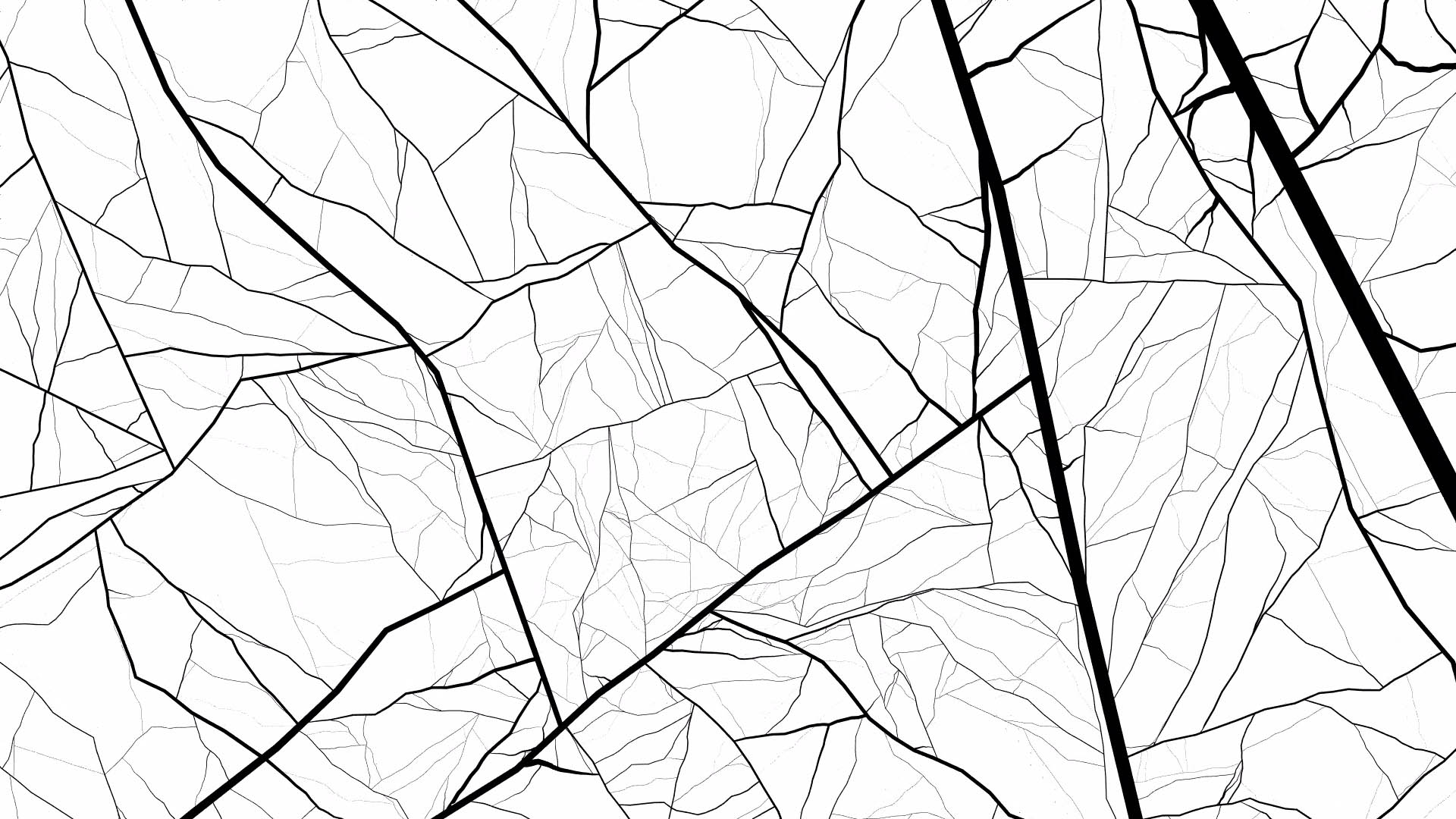

Cracks (Study 2)

24/01/2016

This is the second study for a more complex picture: an ice surface that undergoes endless fracturing without ever breaking completely.

The lines now form indentations of varying width and depth. I added Perlin noise that zooms and rotates with the lines. The noise provides the basis for surface parameters such as distortion, height, color and shininess.

You can find the first study here: vimeo.com/151312235

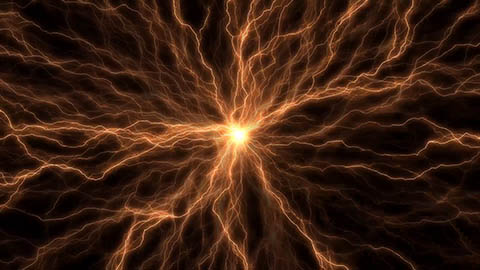

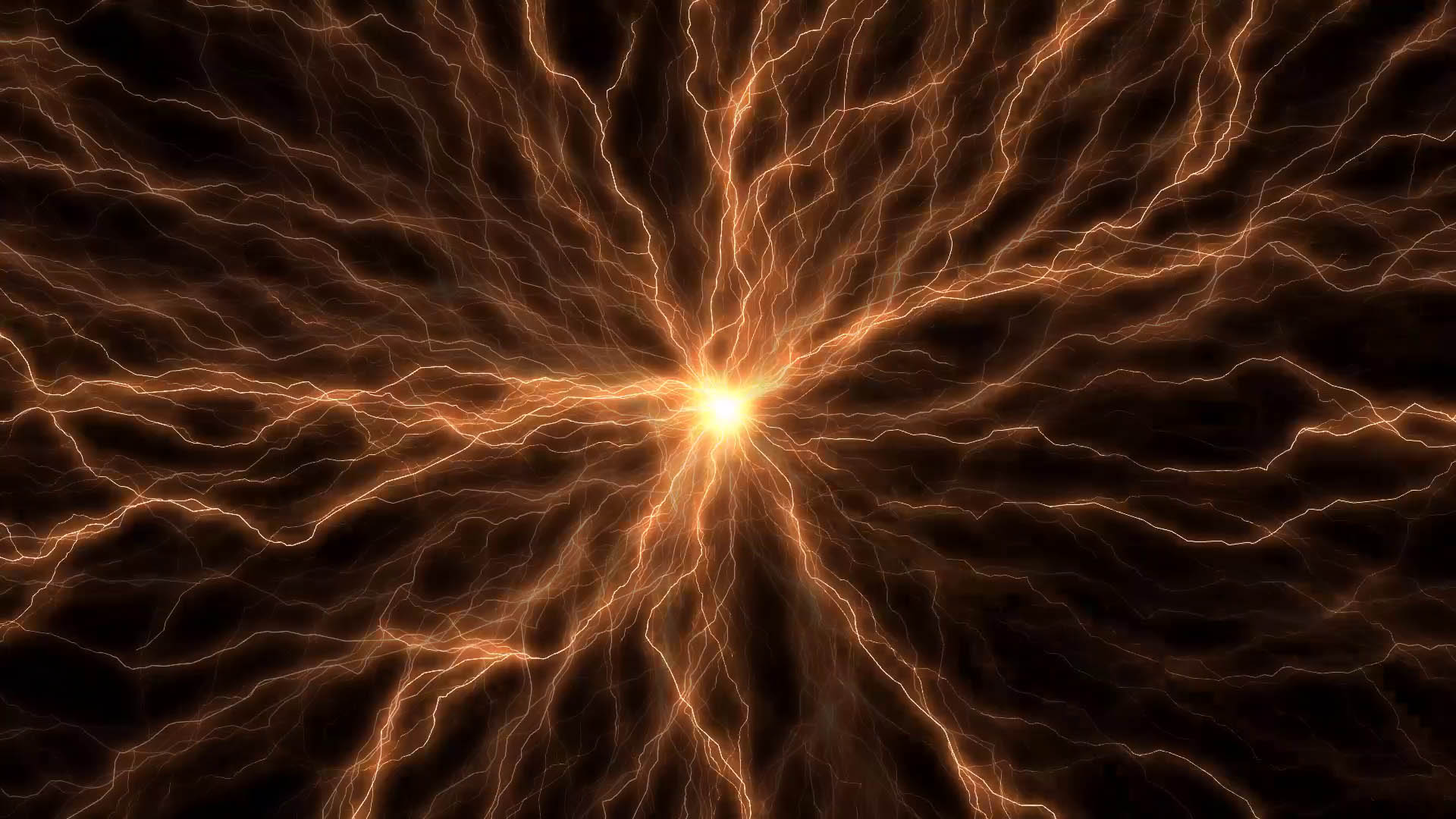

Flashes

Electrical discharge simulation reacting to audio.

17/01/2016

Ambient track by Holger Förterer and Ida Nordpol (vocals, car wash) with additional help from Chris Wening. The street recordings were made in Karlsruhe on Halloween night 2012.

Cracks (Study 1)

10/01/2016

A flight into a surface that slowly cracks more and more. This is the first study for a more complex picture: an ice surface that undergoes endless fracturing without ever breaking completely.

Depending on the parameters, this picture can look like a flight over fields or rivers, an image of a retina or a plant, or simply cracking ice or rock.

Inspiration: Ida Nordpol

You can find the second study here: vimeo.com/152907606

Snow Crystal

03/01/2016

Happy New Year... Winter has finally arrived and I wanted to program a simple snow crystal. I found an algorithm by Gravner and Griffeath at psoup.math.wisc.edu/papers/h3l.pdf that I tried to implement in OpenCL. I think there is more left to explore. Beautiful real crystals can be found at snowcrystals.com

P.S. The video is a time-lapse recording, sped up by a factor of 20.

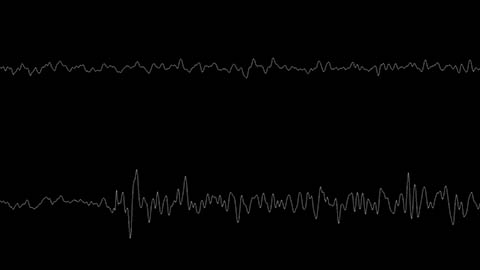

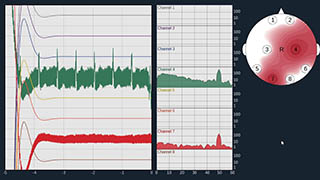

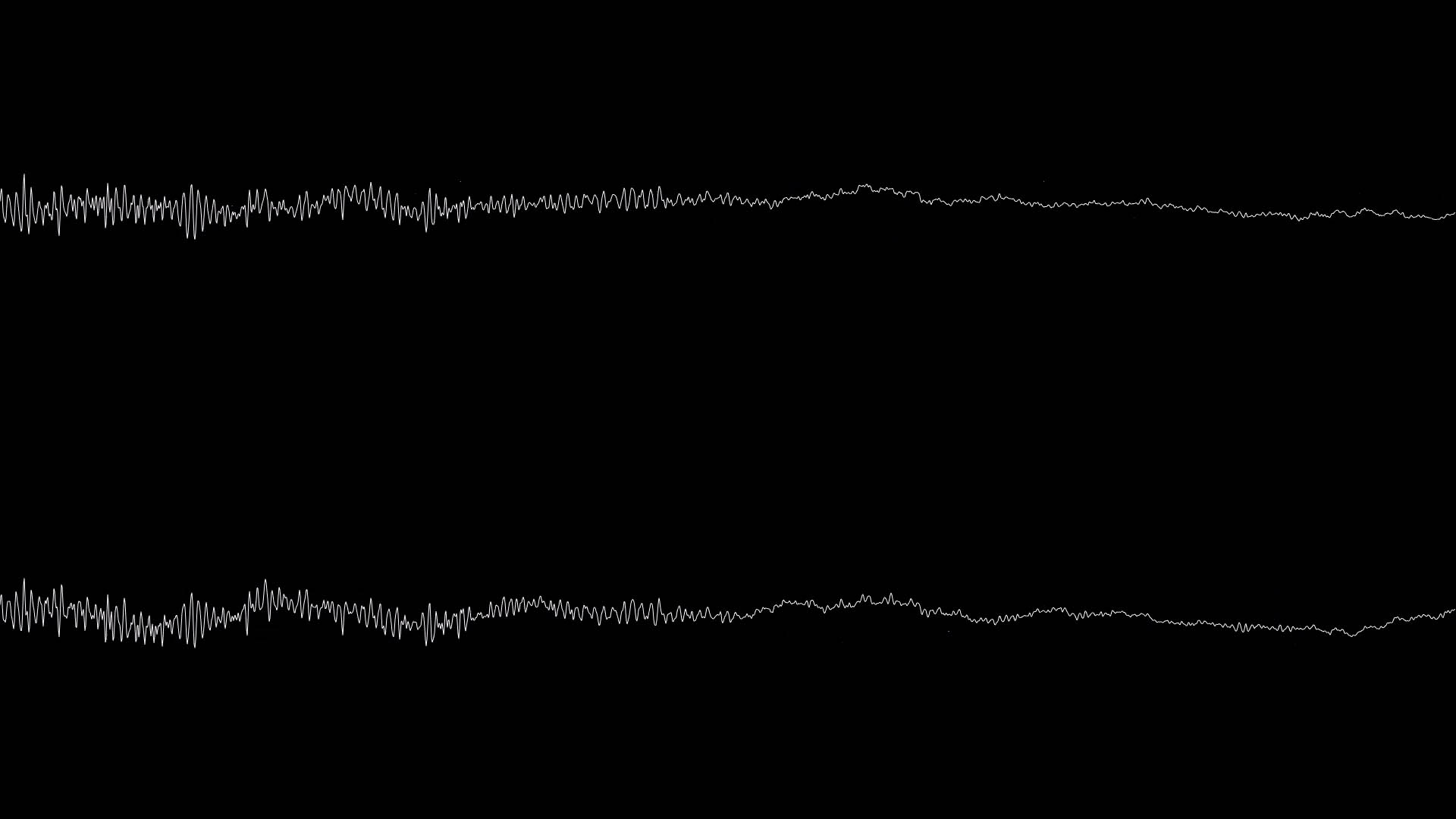

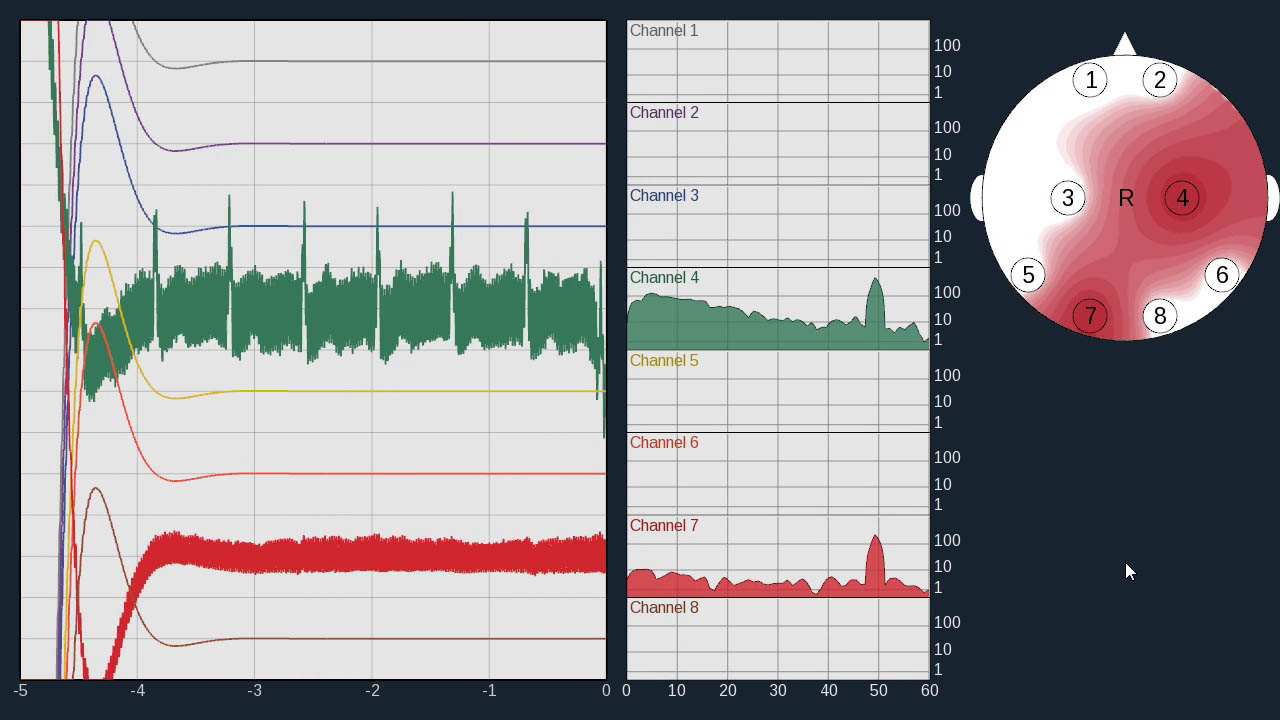

Heartbeat

26/12/2015

In 2016, I want to explore the possibilities of using brainwaves and EKG in the creation of artworks. This video is more of a technical preparation for the things I want to develop later on. What you can see is my heartbeat, captured by an OpenBCI 32-bit board (OpenBCI = Open-Source Brain-Computer Interface). The noise you see at the beginning is 50 Hz mains noise from the environment, which is then notch-filtered so that only the heartbeat remains. I then skip through some additional filters for the data.
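
A notch filter of this kind can be sketched in C++ as a biquad with the well-known Audio EQ Cookbook coefficients. The sample rate of 250 Hz in the example is an assumption about the board's settings, and Q = 4 is a hypothetical choice; the filters used in the video may differ.

```cpp
#include <cassert>
#include <cmath>

// Biquad notch filter: removes a narrow band around 'centerHz' and passes DC unchanged.
class Notch {
public:
    Notch(double sampleRate, double centerHz, double q) {
        assert(centerHz > 0.0 && centerHz < sampleRate / 2.0 && q > 0.0);
        const double w0 = 2.0 * std::acos(-1.0) * centerHz / sampleRate;
        const double alpha = std::sin(w0) / (2.0 * q);
        const double a0 = 1.0 + alpha;
        b0_ = 1.0 / a0;
        b1_ = -2.0 * std::cos(w0) / a0;
        b2_ = 1.0 / a0;
        a1_ = -2.0 * std::cos(w0) / a0;
        a2_ = (1.0 - alpha) / a0;
    }
    double process(double x) { // direct form I
        const double y = b0_ * x + b1_ * x1_ + b2_ * x2_ - a1_ * y1_ - a2_ * y2_;
        x2_ = x1_; x1_ = x;
        y2_ = y1_; y1_ = y;
        return y;
    }
private:
    double b0_, b1_, b2_, a1_, a2_;
    double x1_ = 0.0, x2_ = 0.0, y1_ = 0.0, y2_ = 0.0;
};
```

For example, `Notch hum(250.0, 50.0, 4.0);` followed by `y = hum.process(x);` for every incoming EKG sample.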

Technically, I started with some C++/openFrameworks code by Daniel Goodwin to interface with my EEG/EKG capture board, then went on to port and adapt more of the original Java code of the OpenBCI user interface. I hope to port the full user interface in the coming weeks and publish the code to give others a starting point for using the brainwave hardware with C++/openFrameworks.

You can find more information on the OpenBCI hardware at openbci.com

Cloth

18/12/2015

I was captivated by the beautiful golden shimmer of an Asian cloth yesterday and tried to simulate it here.

Lighting

13/12/2015

Finger exercise: a simple lighting effect that reacts to the loudness of music. I analyze live sound and then feed Art-Net lighting control messages from my software through grandMA onPC lighting software to control the DMX LED light on the right.
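
A C++ sketch of the two ends of this chain, assuming RMS loudness per audio block and a hypothetical `fullScaleRms` that maps to full DMX intensity. `makeArtDmx` assembles an ArtDmx packet following the Art-Net packet layout; sending it over UDP (port 6454) is left out to keep the example platform-independent.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// RMS loudness of an audio block, mapped to a DMX intensity 0..255.
std::uint8_t loudnessToDmx(const std::vector<float>& block, float fullScaleRms = 0.3f) {
    assert(!block.empty() && fullScaleRms > 0.0f);
    double sum = 0.0;
    for (float s : block) sum += static_cast<double>(s) * s;
    const double rms = std::sqrt(sum / block.size());
    return static_cast<std::uint8_t>(std::lround(255.0 * std::min(1.0, rms / fullScaleRms)));
}

// ArtDmx packet (OpCode 0x5000) carrying DMX channel values for one universe.
std::vector<std::uint8_t> makeArtDmx(std::uint16_t universe, std::uint8_t sequence,
                                     const std::vector<std::uint8_t>& channels) {
    assert(!channels.empty() && channels.size() <= 512);
    std::vector<std::uint8_t> data = channels;
    if (data.size() % 2 != 0) data.push_back(0); // payload length must be even
    std::vector<std::uint8_t> p = {'A', 'r', 't', '-', 'N', 'e', 't', 0};
    p.push_back(0x00); p.push_back(0x50);       // OpCode 0x5000, little-endian
    p.push_back(0);    p.push_back(14);         // protocol version 14, big-endian
    p.push_back(sequence);
    p.push_back(0);                              // physical input port (informational)
    p.push_back(static_cast<std::uint8_t>(universe & 0xFF));        // SubUni: low byte
    p.push_back(static_cast<std::uint8_t>((universe >> 8) & 0x7F)); // Net: high 7 bits
    p.push_back(static_cast<std::uint8_t>(data.size() >> 8));       // length, big-endian
    p.push_back(static_cast<std::uint8_t>(data.size() & 0xFF));
    p.insert(p.end(), data.begin(), data.end());
    return p;
}
```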

The music is an excerpt of Greg Hunter (DUBSAHARA) Live @ MEME 2013 by MEME Festival and was licensed under a Creative Commons Licence. I downloaded it from soundcloud.com/meme-festival/greg-hunter-dubsahara-live today.

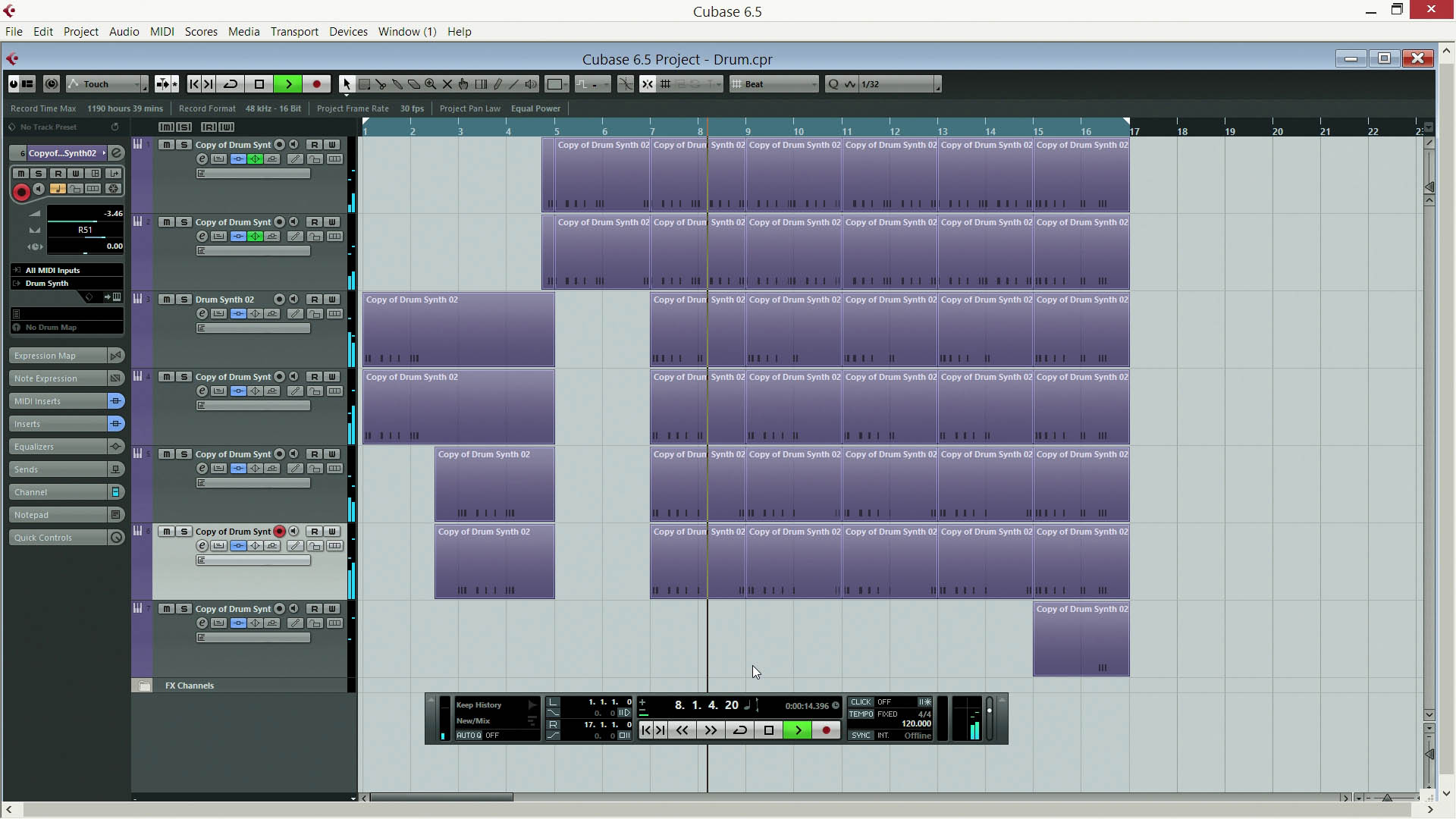

Drum

06/12/2015

Proof of concept for a Cubase VST3 plug-in. I wrote a physical simulation for drums and wanted to connect it to Cubase. The plug-in reads note-on triggers, simulates the drums and outputs their sound. This is more or less in preparation for a 64k demo synth/sequencer, hopefully later next year.

Blue Orange

27/11/2015

I wondered what else could be done with last week's video, so I stabilized the original video image, blurred, emphasized and shifted the chroma, and added some motion blur. The sound is created with granular synthesis. Most of the approximately 8000 sound sources are controlled by the difference image of the stabilized video.

The original video is at vimeo.com/146579433.

Green Sea

22/11/2015

Frederik Busch took a slow-motion video of the stormy Baltic Sea today. The colors were almost completely gray, so I tried my DIN99d color space on it to get a better feel for that color system.
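
For reference, a C++ sketch of the DIN99d transform from CIELAB as I understand the published formulas. It assumes the input Lab values were already computed with DIN99d's modified tristimulus value X' = 1.12 X - 0.12 Z; please check the constants against the standard before relying on them.

```cpp
#include <cassert>
#include <cmath>

struct Lab { double L, a, b; };

// DIN99d coordinates, returned in a Lab-shaped struct (L99d, a99d, b99d).
Lab labToDin99d(const Lab& lab) {
    assert(lab.L >= 0.0);
    const double deg = std::acos(-1.0) / 180.0;
    const double L99 = 325.22 * std::log(1.0 + 0.0036 * lab.L);       // compressed lightness
    const double e = lab.a * std::cos(50.0 * deg) + lab.b * std::sin(50.0 * deg);
    const double f = 1.14 * (-lab.a * std::sin(50.0 * deg) + lab.b * std::cos(50.0 * deg));
    const double G = std::hypot(e, f);
    const double C99 = 22.5 * std::log(1.0 + 0.06 * G);               // compressed chroma
    const double h99 = std::atan2(f, e) + 50.0 * deg;                 // rotated hue angle
    return {L99, C99 * std::cos(h99), C99 * std::sin(h99)};
}
```

The logarithmic chroma compression is what spreads out the nearly gray colors of footage like this.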

The original video is at vimeo.com/146579433.

Hive

15/11/2015

Experimenting with sound again. Every light dot has a sound attached to it. Each particle's depth position determines its start position within a long sample, from front to back. To keep calculations simple, I only compute panning and an approximation of the Doppler effect. The text recording is very old. If I'm not mistaken, I whispered a text by Vilém Flusser.
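
A C++ sketch of a per-particle Doppler factor, assuming a listener at the origin, a stationary medium and a speed of sound of 343 m/s; the playback rate of each particle's sample would be multiplied by the returned factor. This is the textbook formula for a moving source, not necessarily the approximation used in the video, and `panFromPosition` is a hypothetical companion for the panning.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// rate = c / (c + v_r), with v_r the source velocity component pointing away
// from the listener (positive = receding, which lowers the pitch).
double dopplerRate(const Vec3& pos, const Vec3& vel, double speedOfSound = 343.0) {
    const double dist = std::sqrt(pos.x * pos.x + pos.y * pos.y + pos.z * pos.z);
    if (dist == 0.0) return 1.0;
    const double vr = (pos.x * vel.x + pos.y * vel.y + pos.z * vel.z) / dist;
    assert(vr > -speedOfSound); // approaching at or above the speed of sound is not handled
    return speedOfSound / (speedOfSound + vr);
}

// Simple pan from the horizontal direction: -1 = left, +1 = right.
double panFromPosition(const Vec3& pos) {
    const double h = std::hypot(pos.x, pos.z);
    return h == 0.0 ? 0.0 : pos.x / h;
}
```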

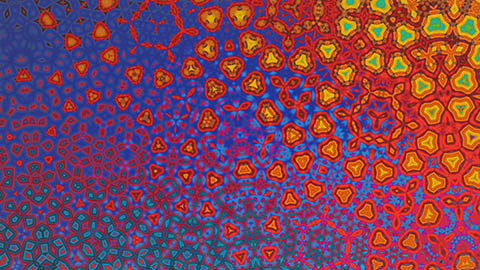

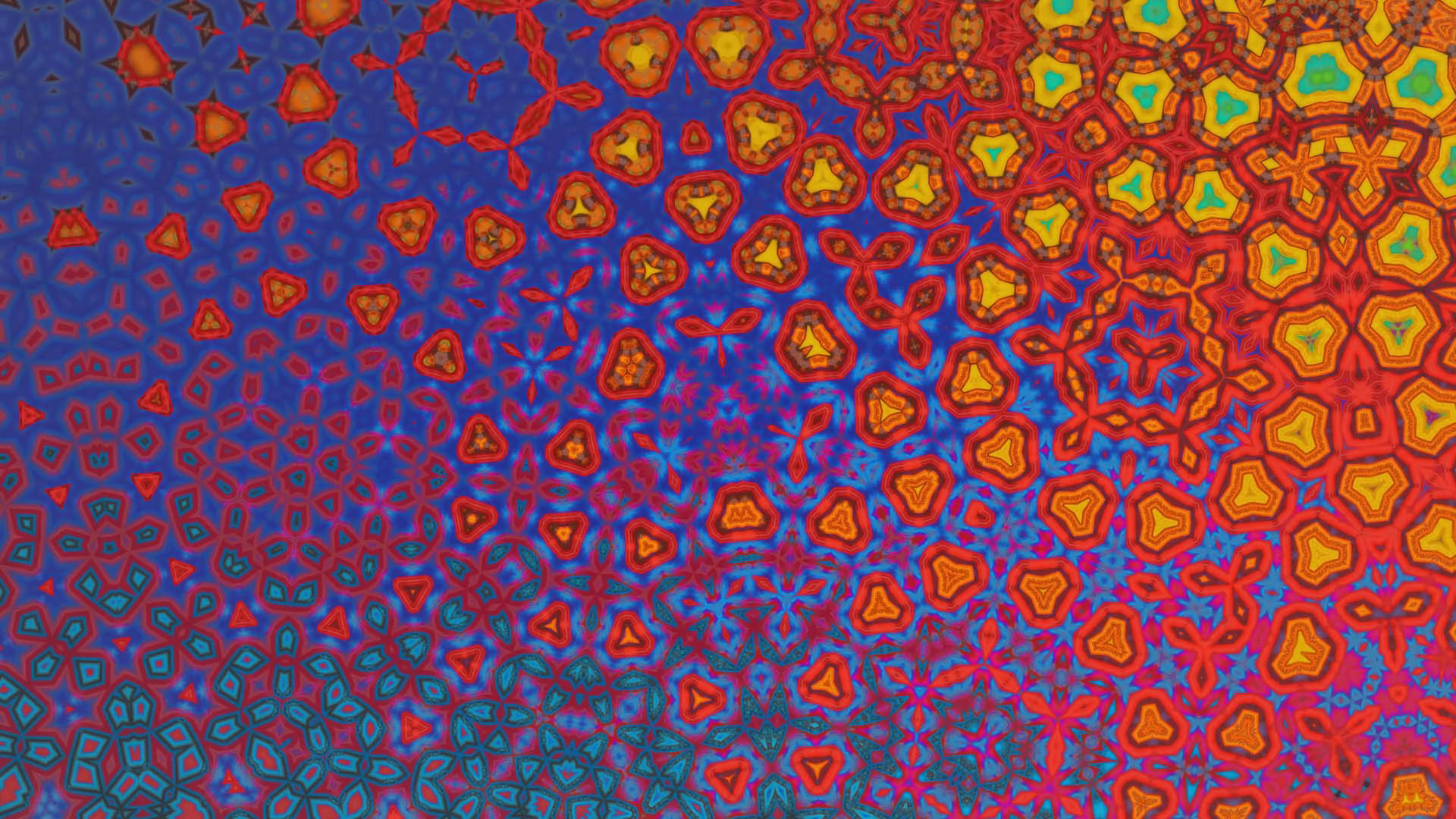

Caleidoscope

08/11/2015

This is the current state of my fractal caleidoscope effect. It normally uses live video and is best viewed on a wide-gamut TV. Today I created a quick ambient track in Cubase for the background. The base video is a collection of sweeps across colorful fabric and other pictures.

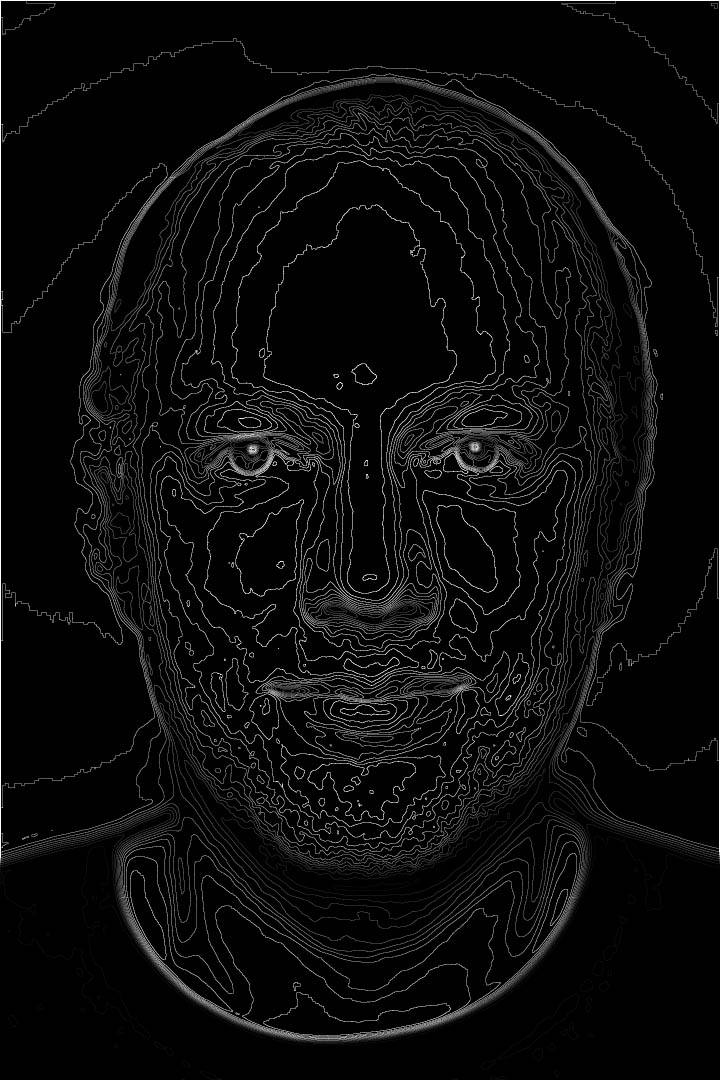

Portrait

01/11/2015

I recently implemented a Marching Squares contour algorithm and thought that playing around with it might do for a start. The original photo was taken by Frederik Busch in 2009.
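
A compact C++ sketch of marching squares on a scalar grid, emitting line segments at an iso level with linear interpolation along the cell edges. The corner and edge numbering is an arbitrary choice, and the two ambiguous saddle cases are resolved in a fixed way rather than by sampling the cell center.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };
struct Segment { Pt a, b; };

std::vector<Segment> marchingSquares(const std::vector<double>& f, int w, int h, double iso) {
    assert(f.size() == static_cast<std::size_t>(w) * h);
    // Corners: 0 top-left, 1 top-right, 2 bottom-right, 3 bottom-left (bit i set = above iso).
    // Edges: 0 top, 1 right, 2 bottom, 3 left. Each row lists edge pairs; -1 = none.
    static const int kTable[16][4] = {
        {-1, -1, -1, -1}, {3, 0, -1, -1}, {0, 1, -1, -1}, {3, 1, -1, -1},
        {1, 2, -1, -1},   {3, 0, 1, 2},   {0, 2, -1, -1}, {3, 2, -1, -1},
        {2, 3, -1, -1},   {2, 0, -1, -1}, {0, 1, 2, 3},   {2, 1, -1, -1},
        {1, 3, -1, -1},   {1, 0, -1, -1}, {0, 3, -1, -1}, {-1, -1, -1, -1}};
    auto val = [&](int x, int y) { return f[static_cast<std::size_t>(y) * w + x]; };
    // Linear interpolation of the iso crossing between two grid points on a crossed edge.
    auto crossing = [&](int x0, int y0, int x1, int y1) {
        const double v0 = val(x0, y0), v1 = val(x1, y1);
        const double t = (iso - v0) / (v1 - v0);
        return Pt{x0 + t * (x1 - x0), y0 + t * (y1 - y0)};
    };
    std::vector<Segment> out;
    for (int y = 0; y + 1 < h; ++y)
        for (int x = 0; x + 1 < w; ++x) {
            const int idx = (val(x, y) > iso) | ((val(x + 1, y) > iso) << 1) |
                            ((val(x + 1, y + 1) > iso) << 2) | ((val(x, y + 1) > iso) << 3);
            auto edgePoint = [&](int e) {
                switch (e) {
                    case 0: return crossing(x, y, x + 1, y);
                    case 1: return crossing(x + 1, y, x + 1, y + 1);
                    case 2: return crossing(x + 1, y + 1, x, y + 1);
                    default: return crossing(x, y + 1, x, y);
                }
            };
            for (int k = 0; k < 4 && kTable[idx][k] >= 0; k += 2)
                out.push_back({edgePoint(kTable[idx][k]), edgePoint(kTable[idx][k + 1])});
        }
    return out;
}
```

Running it over the brightness values of a photo at several iso levels yields the kind of layered contour lines seen in the portrait.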

This is my first "Everyweek". The challenge is to create a picture or sound or video every week.